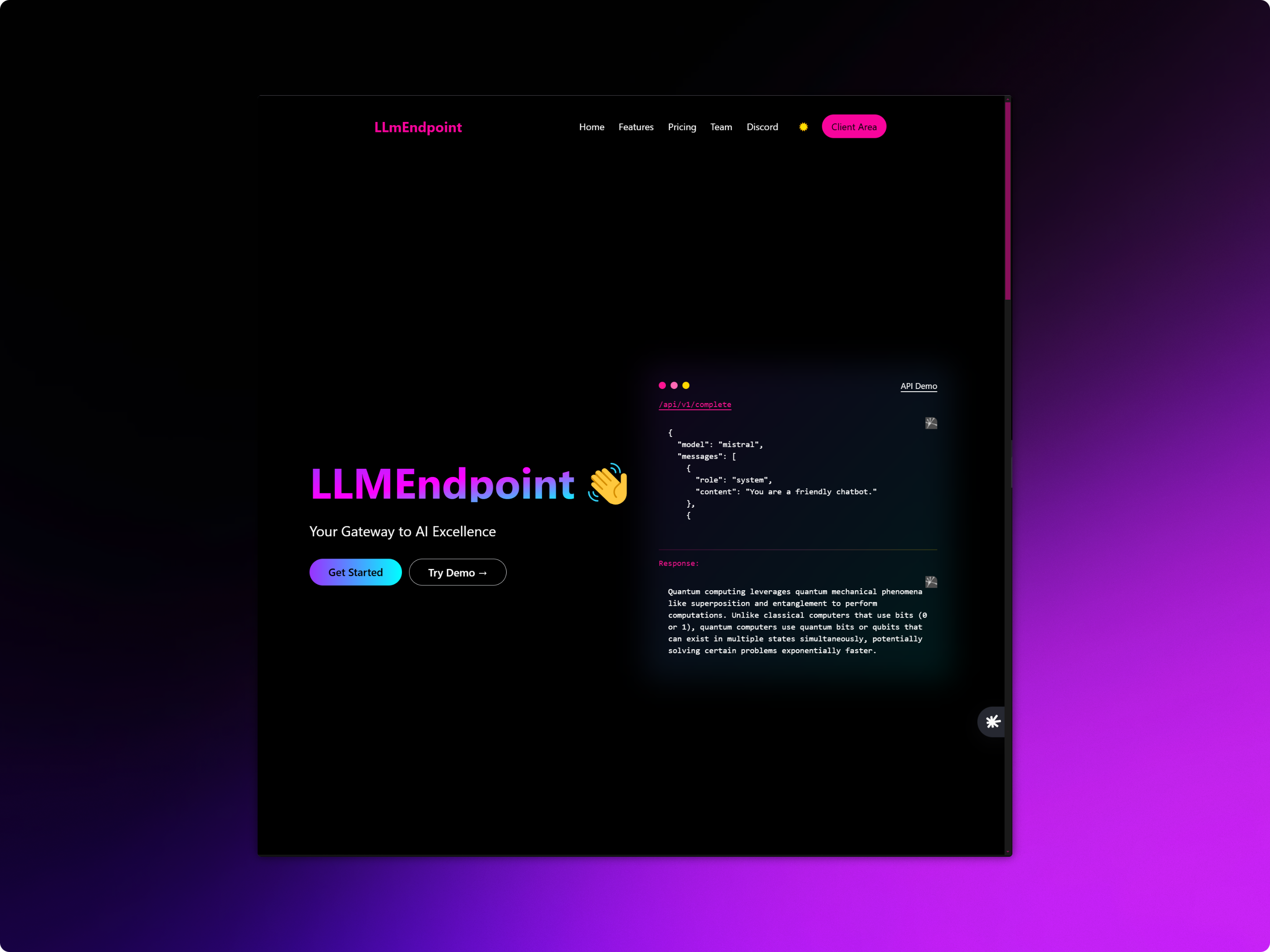

LlmEndpoint - Affordable AI Model API Platform

Engineered a cost-effective AI API platform that provides access to open-source models such as Llama through Ollama, serving 50k+ JWT-authenticated requests per month with competitive pricing and multi-node load balancing.

I dreamed of making AI affordable for small businesses and developers who couldn't afford the high prices charged by the big providers.

I created LlmEndpoint because AI was getting expensive and I wanted to make it cheaper for everyone. It's like opening a library where people can borrow smart AI friends instead of buying them.

The Expensive Problem

I saw that using AI was costing people a lot of money. It was like having to pay $100 every time you wanted to ask a smart computer a question!

Finding Free AI

I discovered open-source AI models that were free but hard to use. It was like finding free books but they were all in a language nobody could read.

Building the Translator

I built a system using Python and Django that could talk to these free AI models and make them easy to use. It was like building a translator that makes foreign books readable.
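The write-up doesn't show how the Django side talks to the models. Below is a minimal sketch that assumes Ollama's documented `/api/generate` REST endpoint on its default local port (11434) and uses only the standard library. The model name `llama3` and the helper names are hypothetical. In the real system, a call like this would presumably sit behind a Django view.

```python
import json
import urllib.request

# Assumed deployment: Ollama running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming Ollama response body."""
    return json.loads(body)["response"]


def generate(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to Ollama and return the model's answer (hypothetical helper)."""
    request = build_generate_request(model, prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_generate_response(response.read())
```

Splitting request building and response parsing out of `generate` lets both be tested without a running Ollama server.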

Making it Safe

I added JWT (JSON Web Token) authentication: each token is like a special ID card that only lets the right people use the AI. No ID card, no AI access!
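The "ID card" check can be sketched with the standard library alone. This assumes HS256 (HMAC-SHA256) signing with a shared secret. The write-up doesn't say which algorithm or library the platform uses, and a production service would normally rely on a vetted library such as PyJWT. The function names here are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT strips base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_token(claims: dict, secret: bytes) -> str:
    """Create an HS256-signed JWT (hypothetical helper)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = (
        _b64url_encode(json.dumps(header, separators=(",", ":")).encode("utf-8"))
        + "."
        + _b64url_encode(json.dumps(claims, separators=(",", ":")).encode("utf-8"))
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url_encode(signature)


def verify_token(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired, else None."""
    parts = token.split(".")
    if len(parts) != 3:
        return None
    header_b64, payload_b64, signature_b64 = parts
    try:
        expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
        # Constant-time comparison avoids leaking signature bytes via timing.
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
    except ValueError:  # covers bad base64, bad JSON, and non-ASCII input
        return None
    if header.get("alg") != "HS256":
        return None
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        return None
    return claims
```

Rejecting any `alg` other than the expected one guards against tokens that try to switch the algorithm.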

Speed & Scale

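I added load balancing and Redis caching. Load balancing is like having multiple checkout lanes at a store so people don't have to wait in long lines, and caching means answers to repeated questions can be handed back right away instead of being generated again.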
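The caching half can be sketched as the cache-aside pattern. So that the example runs without a Redis server, it uses a small in-process TTL cache as a stand-in, and the key format `llm:<model>:<sha256>` is a hypothetical choice. With redis-py, `set(key, value, ex=ttl)` and `get(key)` would play the same roles. Caching only makes sense for requests whose answers may be reused, for example deterministic generation settings.

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """In-process stand-in for Redis: values expire ttl_seconds after being set."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # lazily evict expired entries
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def cache_key(model: str, prompt: str) -> str:
    """Hash the prompt so long inputs produce short, fixed-size keys."""
    digest = hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()
    return f"llm:{model}:{digest}"


def cached_generate(cache: TTLCache, model: str, prompt: str,
                    generate: Callable[[str, str], str]) -> str:
    """Cache-aside: return a cached answer if present, else generate and store it."""
    key = cache_key(model, prompt)
    hit = cache.get(key)
    if hit is not None:
        return hit
    answer = generate(model, prompt)
    cache.set(key, answer)
    return answer
```

Injecting `clock` keeps expiry testable without sleeping.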

Going Live

I launched it and people loved it! Soon it was handling 50,000+ requests every month. It was like opening a popular restaurant that everyone wanted to visit.

50k+ monthly requests • Multi-node load balancing • Automatic failover
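The write-up doesn't describe the balancing strategy behind "multi-node load balancing" and "automatic failover". One common approach is round-robin with retry on the next node. The sketch below assumes that approach. `NodeUnavailable`, the node names, and the `send` callback are hypothetical placeholders for whatever client actually reaches each model server.

```python
import itertools
from typing import Callable, List, Sequence


class NodeUnavailable(Exception):
    """Raised by a (hypothetical) node client when a backend can't serve a request."""


class RoundRobinBalancer:
    """Rotate requests across nodes; if one fails, fail over to the next."""

    def __init__(self, nodes: Sequence[str]):
        if not nodes:
            raise ValueError("at least one node is required")
        self.nodes = list(nodes)
        self._counter = itertools.count()

    def call(self, request: str, send: Callable[[str, str], str]) -> str:
        # Each request starts at the next node in rotation.
        start = next(self._counter) % len(self.nodes)
        errors: List[str] = []
        for offset in range(len(self.nodes)):
            node = self.nodes[(start + offset) % len(self.nodes)]
            try:
                return send(node, request)
            except NodeUnavailable as exc:
                errors.append(f"{node}: {exc}")  # try the next node instead
        raise NodeUnavailable("all nodes failed: " + "; ".join(errors))
```

A fuller version would also track node health so that a node known to be down is skipped without paying for a failed attempt on every pass.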